That! The Haiku and the Sonnet.

Zen and the art of interpreting the creative outputs of LLMs.

Cheap reasoning models with chain-of-thought output (e.g. DeepSeek R1 [mentioned for engagement purposes]) make it extremely tempting to personify LLMs or their outputs. Similarly, certain models such as Claude (in all its forms) have gained a cult-of-personality type following, where users are more likely to use it for its feel (gained through preference fine-tuning, a muted colour scheme, a hand-drawn logo, or all of the above). Without realising it, an increasingly large number of users are assigning meaning to the result of a computation. When I refer to meaning in this article I don’t mean whether something is poetic or accurate or profound, but something closer to the word meaningful: related in some sense to something deeper that we empathise with.

AGI is an unquantifiable threshold, and whether an LLM reaches it will be decided by a collective reading of the outputs it produces. That is why the model cannot be separated from the surrounding infrastructure. The colour scheme, the point of interaction, the UI, even the very act of ‘chatting’ all contribute in some sense to our own reading, and to whether we choose to recognise an other.

For the purposes of this post, which I am writing in Tokyo Station, I will unpick the Western endeavour to seek a deeper meaning and state that the outputs from an LLM are meaningless. I mean this in the sense that inferred tokens originate from a soulless computer. We may impose a meaning on them, but in their most abstracted form they are the result of computations.

Drawing a literary comparison, the skill in reading a haiku is not to read any meaning into it, but to let it wash over you. Should the same be said for the output of an LLM (which truly has no meaning in the first place)? Should we let output tokens wash over us like a haiku, in accordance with Zen? We turn to Roland Barthes’ reading of haiku in Empire of Signs:

Here meaning is only a flash, a slash of light: When the light of sense goes out, but with a flash that has revealed the invisible world, Shakespeare wrote; but the haiku's flash illumines, reveals nothing; it is the flash of a photograph one takes very carefully (in the Japanese manner) but having neglected to load the camera with film. Or again: haiku reproduces the designating gesture of the child pointing at whatever it is (the haiku shows no partiality for the subject), merely saying: that! with a movement so immediate (so stripped of any mediation: that of knowledge, of nomination, or even of possession) that what is designated is the very inanity of any classification of the object: nothing special, says the haiku, in accordance with the spirit of Zen …

This passage shares so much with how we really know LLMs to work (which is different from how we wish LLMs worked, which is to think): tokens appearing in an instant from the void. At the same time, it highlights just how disparate our readings of LLMs are when compared to something like a haiku. This is to be expected, because we assign value to an other, but it is important to consider that this importance is ‘created’ in order to process the meaninglessness of an LLM.

This is further amplified by how we interact with LLMs. When people refer to models such as Anthropic’s Claude, they refer not just to the set of weights, or the data centre, but to the personality contained (again, we pretend that this is the case) within the output, which is defined by the overall experience. The UI, the colour scheme, the typeface, even the act of ‘chatting’: all of these impose a dialogue with an other. Combined, they make it irresistible to place meaning where there is none. Either AGI will refer to a holistic experience, or we must approach our interactions with LLMs fundamentally differently.

How do we get around this imposition of our own values onto something meaningless and, in effect, un-interpret the haiku and let it wash over us? Referring back to Barthes, we learn of different approaches, hundreds of years old:

[…] the haiku has the purity, the sphericality, and the very emptiness of a note of music; perhaps that is why it is spoken twice, in echo; to speak this exquisite language only once would be to attach a meaning to surprise, to effect, to the suddenness of perfection; to speak it many times would postulate that meaning is to be discovered in it, would simulate profundity; between the two, neither singular nor profound, the echo merely draws a line under the nullity of meaning.

Performing LLM inference multiple times (different temperature values?) may help us to de-personify outputs, enabling us to appreciate the content (but not assign meaning to it). However, very quickly we enter into the space of what I will call the Muji paradox (highlighted to me by Sunil).
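A brief aside on the temperature idea, before turning to Muji. The sketch below is only a toy, not how any particular model or API works: the logits are invented, and the names `TOY_LOGITS`, `softmax_with_temperature` and `echo` are hypothetical. It shows the same imagined prompt being ‘spoken’ several times, once per temperature, with higher temperatures flattening the distribution the token is drawn from.

```python
import math
import random

# Hypothetical next-token scores for an imagined prompt. A real model would
# produce these itself; the values here are invented purely for illustration.
TOY_LOGITS = {"pond": 2.0, "frog": 1.5, "water": 1.0, "silence": 0.2}


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def echo(logits, temperatures, rng):
    """Sample one token per temperature, so the prompt is answered repeatedly."""
    samples = []
    for t in temperatures:
        probs = softmax_with_temperature(logits, t)
        tokens = list(probs)
        weights = [probs[tok] for tok in tokens]
        samples.append((t, rng.choices(tokens, weights=weights, k=1)[0]))
    return samples


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so a run can be repeated
    for temperature, token in echo(TOY_LOGITS, [0.5, 1.0, 2.0], rng):
        print(f"T={temperature}: {token}")
```

Placing the samples side by side, rather than reading any single one as the answer, is the closest this toy gets to Barthes’ echo.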

Muji, in English ‘no-brand’, is the antithesis of a brand. It embodies the same values found within a haiku, though in a commercial sense rather than a literary one: the complete lack of meaning. It is important to note, though, that from a Western perspective it has failed. Paradoxically, we have associated an aesthetic, a quality, and a set of values with it, in exactly the same way we have done for LLMs.

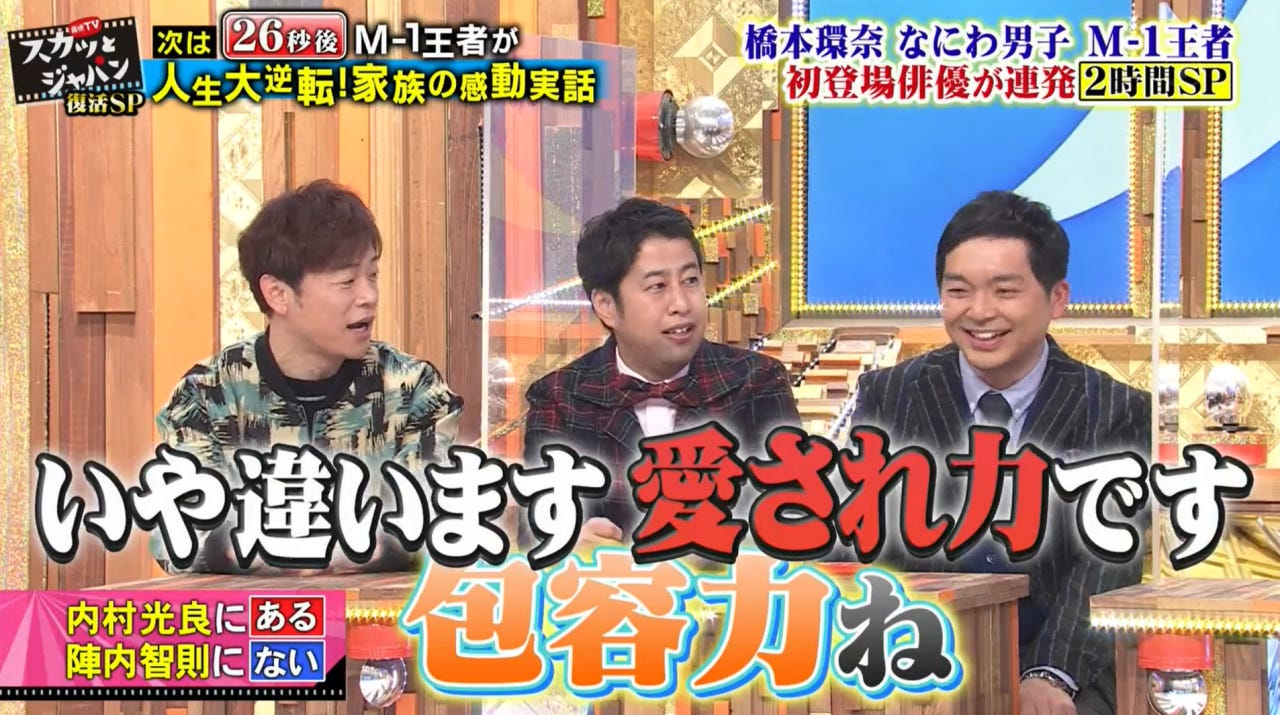

An alternative to doing nothing at all would be to do everything at once. Japanese television is an example of this, and often consists of a dizzying array of fonts and colours. It becomes so overwhelming that you are forced to assign no meaning to the text at all; it simply exists. Read nothing into how it is presented, because it will change. Japanese television in a sense embodies the idea of Zen just as well as Muji does.

I propose that, to provoke an alternate reading of LLMs in opposition to the Western AGI-seeking viewpoint that inevitably converges towards chatting with an other, we treat creative outputs more like haikus. As Barthes says, like the flash of a photograph one takes very carefully but having neglected to load the camera with film. This may mean taking the Muji route and attempting to strip any aesthetic, or imposition of values, from an LLM (obviously it is not in the interest of an AI company to do this). OpenAI probably has the ‘blandest’ aesthetic, but like Muji, even this has become meaning-full. An alternative would be, as when reading a haiku, to present tokens multiple times, similar to Raymond Queneau’s ‘A Hundred Thousand Billion Poems’. Interacting with an LLM may then feel closer to watching a Japanese TV show: so overwhelming in alternative tokens, typeface, colour, conflicting aesthetics, even mode of interaction (re: chatting), that we are forced to strip away any emotion we might infer.

Maybe that’s why we’re struggling to process AI and art, and why the concept of AGI is so messy and intangible: we’re imposing a meaning on something that clearly has none (or even worse, in the case of Claude, imposing a meaning on something that we’ve already imposed a meaning on). If we embrace this more Buddhist perspective, it may unlock an alternate reading, complementary to our own AGI-centric one, mirroring the individual dividing lines of the West/East, the Haiku and the Sonnet.